Hello, I am Zixiang Di, currently pursuing a Master’s degree in Computer Science at East China Normal University (ECNU). I received my bachelor’s degree in Computer Science from the University of Shanghai for Science and Technology (USST).

My research interests include LLM post-training, model merging, and black-box optimization. I have published five papers at top international AI conferences such as NeurIPS, ACL, and AAAI (including their associated competitions), and one paper has been conditionally accepted by a top-tier SCI Q1 journal.

I am currently seeking job opportunities related to large language models.

Feel free to reach out via email at dizixiang@163.com or aarondi1119@gmail.com if you have any relevant opportunities or ideas for collaboration!

🔥 News

- 2025.05: 🎉🎉 Two papers accepted to ACL 2025 Main Conference!

- 2025.05: 🎉🎉 One paper accepted to ACL 2025 XLLM Workshop!

- 2025.05: 🎉🎉 Awarded 3rd place 🏆 in the XLLM@ACL2025 Shared Task-III: LLM for Structural Reasoning!

- 2025.01: 🎉🎉 Invited to give a talk at the University of Toronto on our method from the NeurIPS 2024 LLM Merging Competition!

- 2024.12: 🎉🎉 One paper accepted to AAAI 2025!

- 2024.12: 🎉🎉 One paper accepted to NeurIPS 2024 LMC!

- 2024.12: 🎉🎉 Awarded 3rd place 🏆 in the NeurIPS 2024 LLM Merging Competition!

📝 Publications

\* indicates my Master’s advisor.

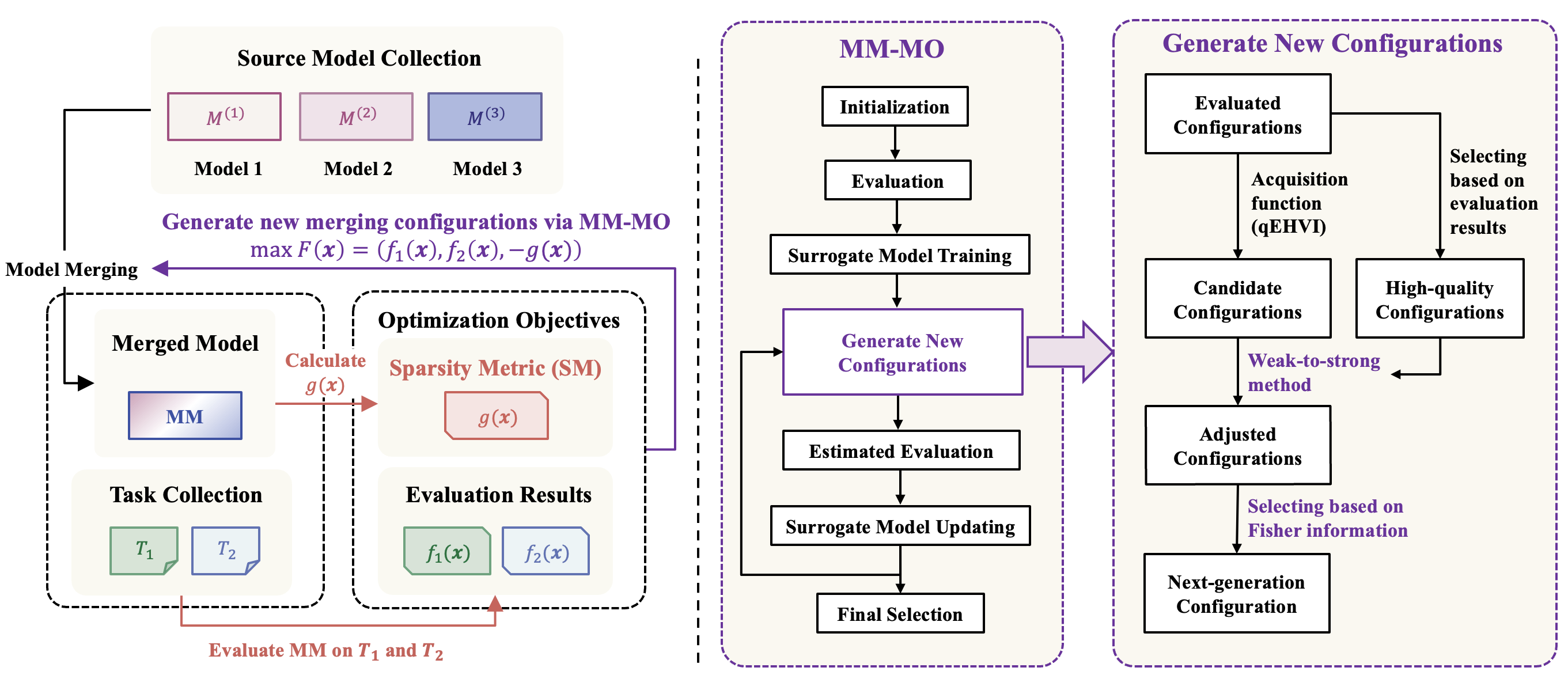

It’s Morphing Time: Unleashing the Potential of Multiple LLMs via Multi-objective Optimization

Bingdong Li*, Zixiang Di, Yanting Yang, Hong Qian, Peng Yang, Hao Hao, Ke Tang, Aimin Zhou

arXiv

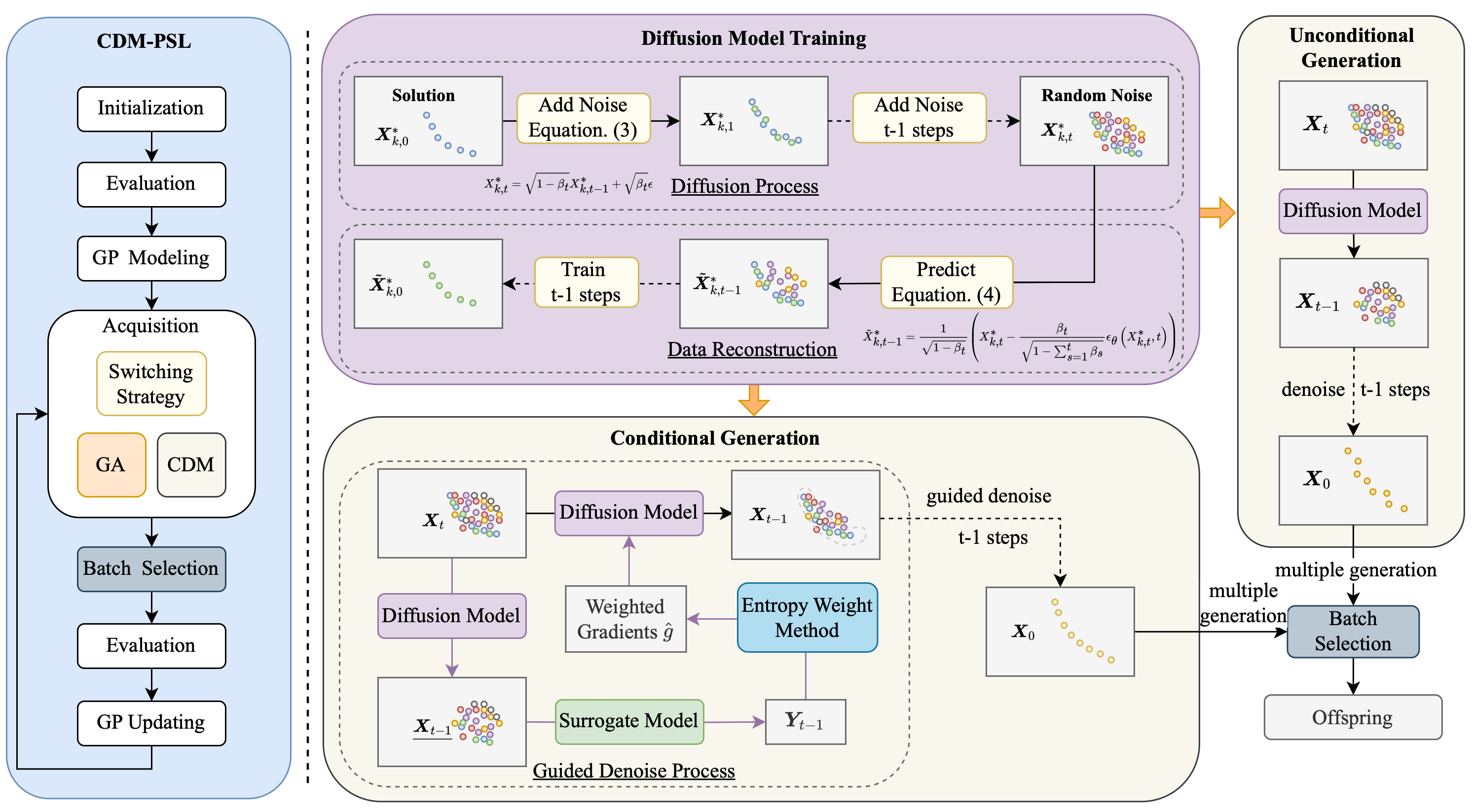

Expensive Multi-Objective Bayesian Optimization Based on Diffusion Models

Bingdong Li*, Zixiang Di, Yongfan Lu, Hong Qian, Feng Wang, Peng Yang, Ke Tang, Aimin Zhou

AAAI 2025 | Code

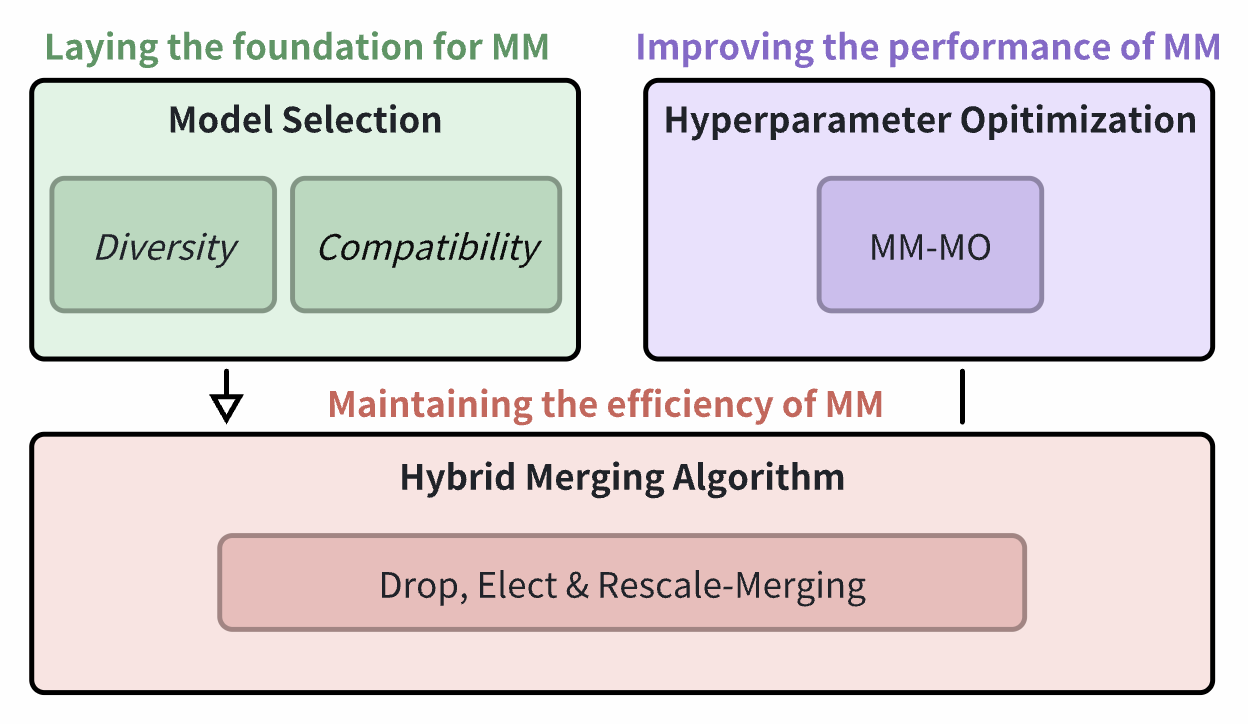

Efficient Model Merging with Strategic Model Selection, Merging, and Hyperparameter Optimization

Zixiang Di, Yaoming Yang, Mei Jiang, Bingdong Li*, Hong Qian, Aimin Zhou

NeurIPS 2024 LMC

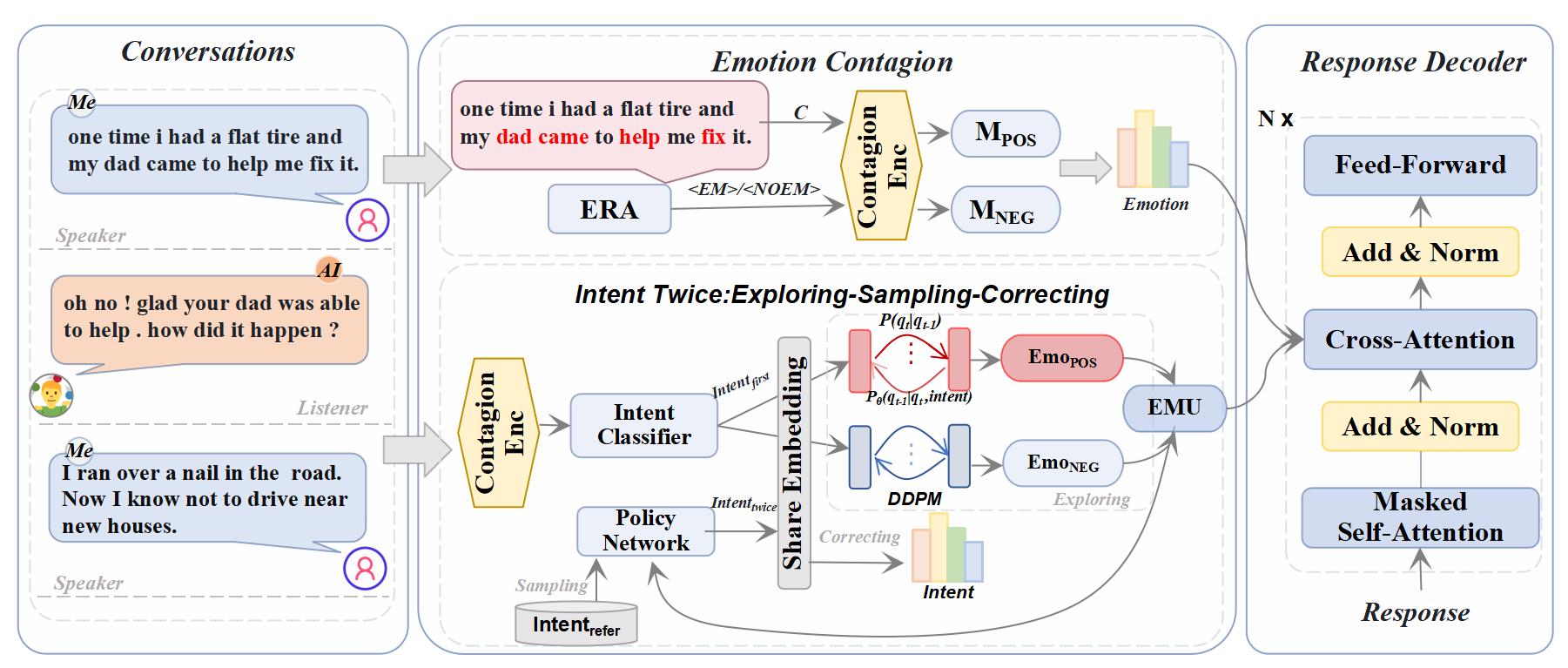

Jiahao Yuan, Zixiang Di, Zhiqing Cui, Guisong Yang, Usman Naseem

ACL 2025 Main

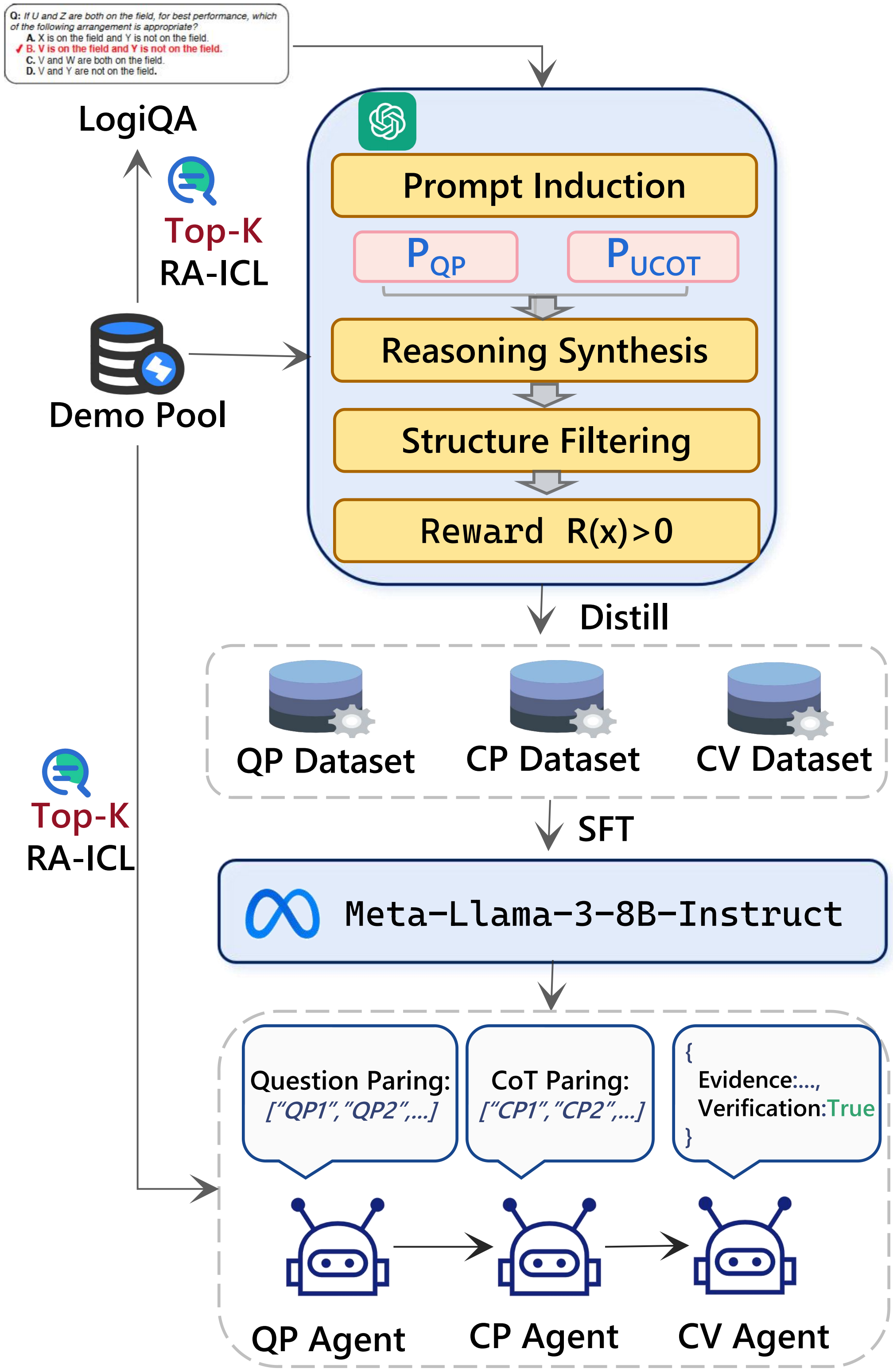

Jiahao Yuan, Dehui Du, Hao Zhang, Zixiang Di, Usman Naseem

ACL 2025 Main | Code

Jiahao Yuan, Xingzhe Sun, Xing Yu, Jingwen Wang, Dehui Du, Zhiqing Cui, Zixiang Di

ACL 2025 XLLM Workshop | Code

🎖 Honors and Awards

- 2024.09 Shanghai AI Lab: LLM Practical Training Camp, Outstanding Participant

- 2024.09 Huawei Cup: 6th China Postgraduate Artificial Intelligence Innovation Competition, National Second Prize

- 2022.05 Lanqiao Cup: C/C++ Programming, Shanghai Region, First Prize

- 2022.05 China Collegiate Programming Contest (CCPC): National Finals of Group Programming Ladder Tournament, Second Prize

- 2022.03 National Collegiate Algorithm Design and Programming Challenge, Silver Award

- 2021.12 TI Cup: National Undergraduate Electronic Design Contest, Shanghai Region, Second Prize

- 2021.12 Shanghai Government Scholarship

- 2021.12 Outstanding Student at USST

- 2019 - 2023 First-Class Scholarship for Academic Excellence at USST

📖 Education

- 2023.09 - 2026.06, Computer Science, East China Normal University.

- 2019.09 - 2023.06, Computer Science, University of Shanghai for Science and Technology.